TexLexAn is an experimental set of 5 programs:

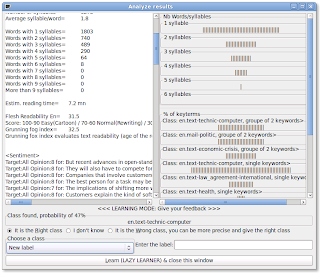

- The "analyser - classifier - summarizer" engine does the main job. The text is converted and tokenized, key terms (1-grams to n-grams) are searched, sentiments are extracted, the text is categorized (a linear classifier is used for this operation), and finally the most relevant sentences are extracted and simplified where possible. This program has only a CLI (not very user friendly), is written in C, and is strictly compliant with the POSIX standard.

- The "learner" engine computes the weights of the terms and adds new terms to the knowledge base. It has only a CLI, is written in C, and is strictly compliant with the POSIX standard.

- The "search" engine looks for a sentence or keywords in the list of archived summaries and retrieves the link to the original document. This program is also written in C and has only a command line interface (CLI).
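To make the categorization step more concrete, here is a minimal sketch of a linear classifier over 1-grams and 2-grams. It is written in Python for brevity (the real engine is in C), and the knowledge base, its weights, and the function names `ngrams` and `classify` are assumptions made up for illustration, not TexLexAn's actual data or API.

```python
from collections import Counter

def ngrams(tokens, n_max=2):
    """Return every 1-gram up to n_max-gram as a space-joined string."""
    out = []
    for n in range(1, n_max + 1):
        for i in range(len(tokens) - n + 1):
            out.append(" ".join(tokens[i:i + n]))
    return out

# Hypothetical knowledge base: category -> {term: weight}.
KNOWLEDGE_BASE = {
    "computing": {"linear classifier": 2.0, "program": 1.0, "posix": 1.5},
    "cooking": {"recipe": 2.0, "oven": 1.5},
}

def classify(text, kb=KNOWLEDGE_BASE):
    """Score each category as the weighted sum of term counts, pick the best."""
    counts = Counter(ngrams(text.lower().split()))
    scores = {
        category: sum(weight * counts[term] for term, weight in terms.items())
        for category, terms in kb.items()
    }
    return max(scores, key=scores.get), scores

category, scores = classify("The program uses a linear classifier")
print(category, scores)
```

In this picture, a learner would be whatever adjusts the weights and adds new terms to the dictionary; the sketch leaves that part out.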

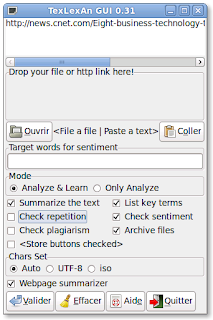

- The graphical front end of the analyser-classifier-summarizer program offers a user-friendly interface, allowing files or HTTP links to be dragged and dropped and the most important options to be set. It glues together several programs such as wget, bzip, pdftotext, antiword, odt2txt, ppthtml, and the texlexan and learner engines.

- The graphical front end of the search program is very simple. It lets you enter the sentence or keywords to search for, sets some search options, and launches the search program. The results are automatically displayed in your default web browser.
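As a rough illustration of what a keyword search over archived summaries can look like, here is a small Python sketch. The archive layout (a list of records with `summary` and `link` fields) and the function name `search_summaries` are assumptions; the source does not describe how the real search engine stores or matches summaries.

```python
def search_summaries(archive, query):
    """Return the links of all summaries that contain every query keyword."""
    keywords = query.lower().split()
    return [
        entry["link"]
        for entry in archive
        if all(keyword in entry["summary"].lower() for keyword in keywords)
    ]

# Hypothetical archive entries, invented for the example.
archive = [
    {"summary": "A linear classifier assigns a category to each text.",
     "link": "http://example.org/classifier.html"},
    {"summary": "How to bake bread in a home oven.",
     "link": "http://example.org/bread.html"},
]

print(search_summaries(archive, "linear classifier"))
```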

Both graphical front-end programs are written in Python and use the GTK libraries.
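The "glue" role of the first front end can be pictured as choosing a text converter from the file extension. The sketch below only builds the command line and does not run anything. The extension table and the function name `conversion_command` are assumptions; how the actual front end dispatches, and how it then calls the texlexan engine, is not described here.

```python
import os

# Assumed mapping from file extension to a converter command that writes
# plain text to standard output.
CONVERTERS = {
    ".pdf": lambda path: ["pdftotext", path, "-"],
    ".doc": lambda path: ["antiword", path],
    ".odt": lambda path: ["odt2txt", path],
}

def conversion_command(path):
    """Return the converter command for a file, or None if no converter applies."""
    extension = os.path.splitext(path)[1].lower()
    make_command = CONVERTERS.get(extension)
    return make_command(path) if make_command else None

print(conversion_command("report.pdf"))
```

A command built this way could be passed to `subprocess.run(..., capture_output=True)` to collect the extracted text before analysis.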
